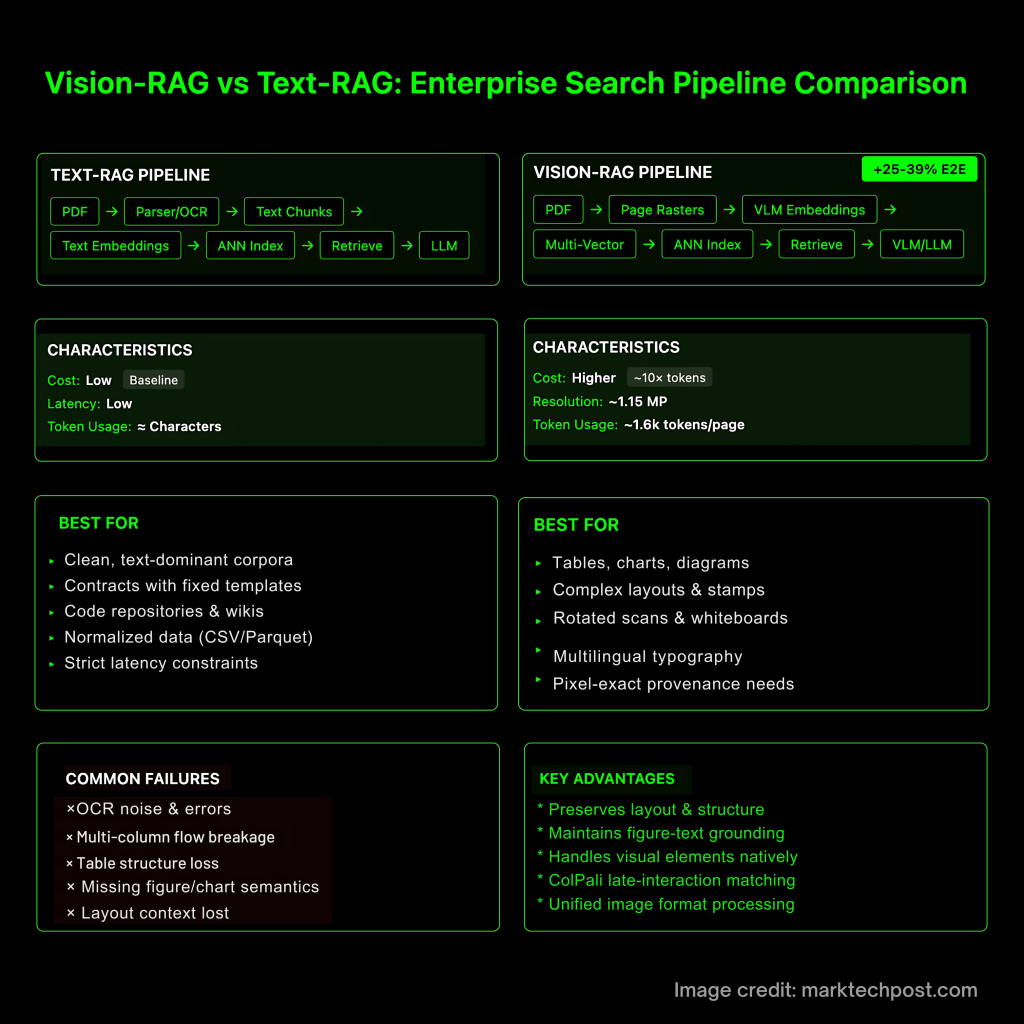

Most RAG failures originate at retrieval, not generation. Text-first pipelines lose layout semantics, table structure, and figure grounding during PDF→text conversion, degrading recall and precision before an LLM ever runs. Vision-RAG—retrieving rendered pages with vision-language embeddings—directly targets this bottleneck and shows material end-to-end gains on visually rich corpora.

Pipelines (and where they fail)

Text-RAG. PDF → (parser/OCR) → text chunks → text embeddings → ANN index → retrieve → LLM. Typical failure modes: OCR noise, multi-column flow breakage, table cell structure loss, and missing figure/chart semantics—documented by table- and doc-VQA benchmarks created to measure exactly these gaps.

Vision-RAG. PDF → page raster(s) → VLM embeddings (often multi-vector with late-interaction scoring) → ANN index → retrieve → VLM/LLM consumes high-fidelity crops or full pages. This preserves layout and figure-text grounding; recent systems (ColPali, VisRAG, VDocRAG) validate the approach.
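To make "multi-vector with late-interaction scoring" concrete, here is a minimal sketch of MaxSim-style scoring in pure Python. It assumes each query has already been embedded as a list of token vectors and each page as a list of patch vectors; the toy vectors, the page ids, and the helper names (`cosine`, `maxsim_score`, `rank_pages`) are illustrative and do not come from any specific library.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def maxsim_score(query_vecs: List[Vector], page_vecs: List[Vector]) -> float:
    """Late interaction: each query token keeps only its best-matching page
    patch, and those per-token maxima are summed into one page score."""
    return sum(
        max((cosine(q, p) for p in page_vecs), default=0.0)
        for q in query_vecs
    )


def rank_pages(query_vecs: List[Vector], pages: Dict[str, List[Vector]]) -> List[str]:
    """Return page ids sorted by descending late-interaction score."""
    scores = {pid: maxsim_score(query_vecs, vecs) for pid, vecs in pages.items()}
    return sorted(scores, key=lambda pid: scores[pid], reverse=True)


# Toy embeddings (hypothetical): 2 query tokens, 3-dimensional vectors.
query = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
pages = {
    "page_a": [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
    "page_b": [[0.0, 0.0, 1.0], [0.7, 0.7, 0.0]],
}
ranking = rank_pages(query, pages)
print(ranking)  # ['page_a', 'page_b']
```

This brute-force loop scores every page. A production system would normally use the ANN index to shortlist candidate pages and apply this scoring only to that shortlist.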

What current evidence supports

- Document-image retrieval works and is simpler. ColPali embeds page images and uses late-interaction matching; on the ViDoRe benchmark it outperforms modern text pipelines while remaining end-to-end trainable.

- End-to-end lift is measurable. VisRAG reports 25–39% end-to-end improvement over text-RAG on multimodal documents when both retrieval and generation use a VLM.

- Unified image format for real-world docs. VDocRAG shows that keeping documents in a unified image format (tables, charts, PPT/PDF) avoids parser loss and improves generalization; it also introduces OpenDocVQA for evaluation.

- Resolution drives reasoning quality. High-resolution support in VLMs (e.g., Qwen2-VL/Qwen2.5-VL) is explicitly tied to SoTA results on DocVQA/MathVista/MTVQA; fidelity matters for ticks, superscripts, stamps, and small fonts.

Costs: vision context is often an order of magnitude heavier, because of tokens

Vision inputs inflate cost through token counts driven by tiling, not necessarily through a higher per-token price. For GPT-4o-class models, total tokens ≈ base + (tile_tokens × tiles), so a 1–2 MP page can cost roughly 10× as much as a small text chunk. Anthropic recommends capping images at about 1.15 MP (~1.6k tokens) for responsiveness. By contrast, Google Gemini 2.5 Flash-Lite prices text, image, and video at the same per-token rate, but large images still consume many more tokens. Engineering implication: adopt selective fidelity, preferring a targeted crop over a downsampled page, and a downsampled page over a full-resolution page.
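The tiling formula can be turned into a rough estimator. The sketch below is an approximation under stated assumptions: the defaults (`base_tokens=85`, `tile_tokens=170`, `tile_px=512`) are assumed values for a GPT-4o-class high-detail mode and should be checked against the provider's current documentation, and any resizing the provider applies before tiling is ignored. The page and crop dimensions are hypothetical.

```python
import math


def estimate_image_tokens(
    width_px: int,
    height_px: int,
    base_tokens: int = 85,    # assumed fixed overhead per image
    tile_tokens: int = 170,   # assumed cost per tile
    tile_px: int = 512,       # assumed square tile edge, in pixels
) -> int:
    """total tokens ≈ base + tile_tokens × tiles, with tiles counted on a grid."""
    if width_px <= 0 or height_px <= 0:
        raise ValueError("image dimensions must be positive")
    tiles = math.ceil(width_px / tile_px) * math.ceil(height_px / tile_px)
    return base_tokens + tile_tokens * tiles


# Three fidelity levels for the same (hypothetical) page.
full_page = estimate_image_tokens(1024, 1536)   # ~1.6 MP render, 2 x 3 tiles
downsampled = estimate_image_tokens(512, 768)   # half resolution, 1 x 2 tiles
crop = estimate_image_tokens(512, 512)          # one region of interest, 1 tile

print(full_page, downsampled, crop)  # 1105 425 255
```

Under these assumptions the full page costs a little over four times the crop, which is the arithmetic behind sending the smallest image that still contains the evidence.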

Design rules for production Vision-RAG

When is Text-RAG still the right default?

- Clean, text-dominant corpora (contracts with fixed templates, wikis, code)

- Strict latency/cost constraints for short answers

- Data already normalized (CSV/Parquet)—skip pixels and query the table store
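The three conditions above can be expressed as a simple routing rule. This is a sketch only: the `CorpusProfile` fields, the thresholds, and the returned labels are hypothetical placeholders that a team would need to calibrate against its own corpus and latency budget.

```python
from dataclasses import dataclass


@dataclass
class CorpusProfile:
    is_normalized_table: bool     # data already lives in CSV/Parquet
    visual_page_fraction: float   # share of pages with tables, charts, or scans
    latency_budget_ms: int        # end-to-end budget per answer


def choose_pipeline(
    profile: CorpusProfile,
    visual_threshold: float = 0.2,   # hypothetical cutoff for "text-dominant"
    tight_latency_ms: int = 500,     # hypothetical cutoff for "strict latency"
) -> str:
    """Return 'table-store', 'text-rag', or 'vision-rag' for a corpus."""
    if profile.is_normalized_table:
        return "table-store"   # skip pixels and query the structured data
    if profile.visual_page_fraction < visual_threshold:
        return "text-rag"      # clean, text-dominant corpus
    if profile.latency_budget_ms < tight_latency_ms:
        return "text-rag"      # strict latency/cost constraints
    return "vision-rag"


wiki = CorpusProfile(False, 0.05, 2000)
scanned_reports = CorpusProfile(False, 0.6, 3000)
sales_parquet = CorpusProfile(True, 0.0, 1000)
print(choose_pipeline(wiki), choose_pipeline(scanned_reports), choose_pipeline(sales_parquet))
# text-rag vision-rag table-store
```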

Evaluation: measure retrieval + generation jointly

Add multimodal RAG benchmarks to your harness—e.g., M²RAG (multi-modal QA, captioning, fact-verification, reranking), REAL-MM-RAG (real-world multi-modal retrieval), and RAG-Check (relevance + correctness metrics for multi-modal context). These catch failure cases (irrelevant crops, figure-text mismatch) that text-only metrics miss.
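Independent of which benchmark is used, retrieval and generation can be scored jointly per example. The sketch below is not the metric definition of any benchmark named above. It assumes a hypothetical `Example` record holding ranked retrieved page ids, gold page ids, and predicted and gold answers, and it uses exact match as a stand-in for whatever answer metric the harness actually applies.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass
class Example:
    retrieved: List[str]   # ranked page ids returned by the retriever
    relevant: Set[str]     # gold page ids that contain the evidence
    predicted: str         # generated answer
    gold: str              # reference answer


def hit_at_k(ex: Example, k: int) -> bool:
    """True if any of the top-k retrieved pages is a gold page."""
    return any(pid in ex.relevant for pid in ex.retrieved[:k])


def exact_match(ex: Example) -> bool:
    """Case- and whitespace-insensitive string equality."""
    return ex.predicted.strip().lower() == ex.gold.strip().lower()


def joint_report(examples: List[Example], k: int = 3) -> Dict[str, float]:
    """Report retrieval and answer quality together, plus their overlap."""
    if not examples:
        raise ValueError("need at least one example")
    n = len(examples)
    hits = [hit_at_k(ex, k) for ex in examples]
    correct = [exact_match(ex) for ex in examples]
    return {
        "hit_rate_at_k": sum(hits) / n,
        "exact_match": sum(correct) / n,
        # Correct answer with the evidence page retrieved.
        "grounded_correct": sum(h and c for h, c in zip(hits, correct)) / n,
        # Correct answer although retrieval missed: worth inspecting.
        "correct_without_evidence": sum((not h) and c for h, c in zip(hits, correct)) / n,
    }


examples = [
    Example(["p1", "p2"], {"p1"}, "42", "42"),     # hit, correct
    Example(["p9", "p3"], {"p4"}, "Q3", "q3"),     # miss, correct
    Example(["p5"], {"p5"}, "red", "blue"),        # hit, wrong
    Example(["p7", "p8"], {"p6"}, "n/a", "7%"),    # miss, wrong
]
report = joint_report(examples, k=3)
print(report)
```

Separating `grounded_correct` from `correct_without_evidence` shows whether answer accuracy is actually supported by the retrieved pages or is coming from the model's prior knowledge.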

Summary

Text-RAG remains efficient for clean, text-only data. Vision-RAG is the practical default for enterprise documents with layout, tables, charts, stamps, scans, and multilingual typography. Teams that (1) align modalities, (2) deliver selective high-fidelity visual evidence, and (3) evaluate with multimodal benchmarks consistently get higher retrieval precision and better downstream answers—now backed by ColPali (ICLR 2025), VisRAG’s 25–39% E2E lift, and VDocRAG’s unified image-format results.


Michal Sutter is a data science professional with a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michal excels at transforming complex datasets into actionable insights.